Highlights

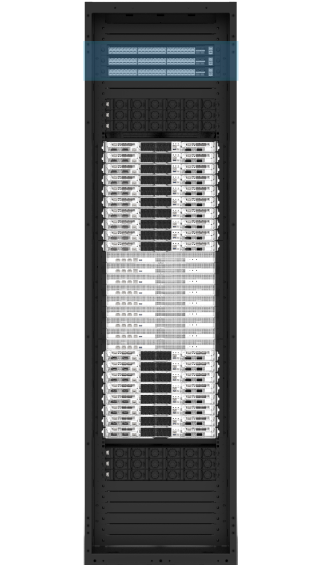

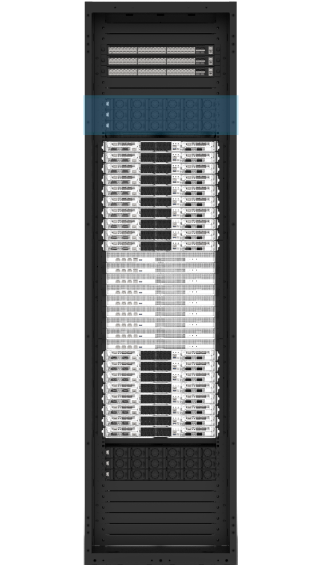

- 36 NVIDIA Grace CPUs

- 72 NVIDIA B200 Tensor Core GPUs

- CPUs and GPUs Connected by NVIDIA NVLink-C2C and 5th Gen NVIDIA NVLink Technology

- Built for Real-Time Trillion-Parameter Inference and Training

- Front-Access IT Equipment for High Serviceability

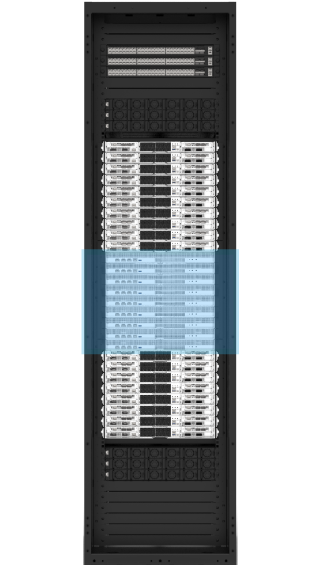

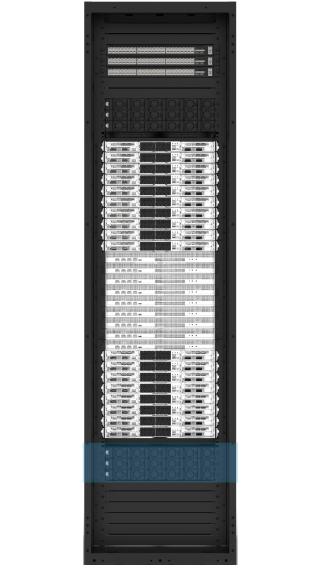

Rack-scale Architecture


Design Details

2 x Management Switches

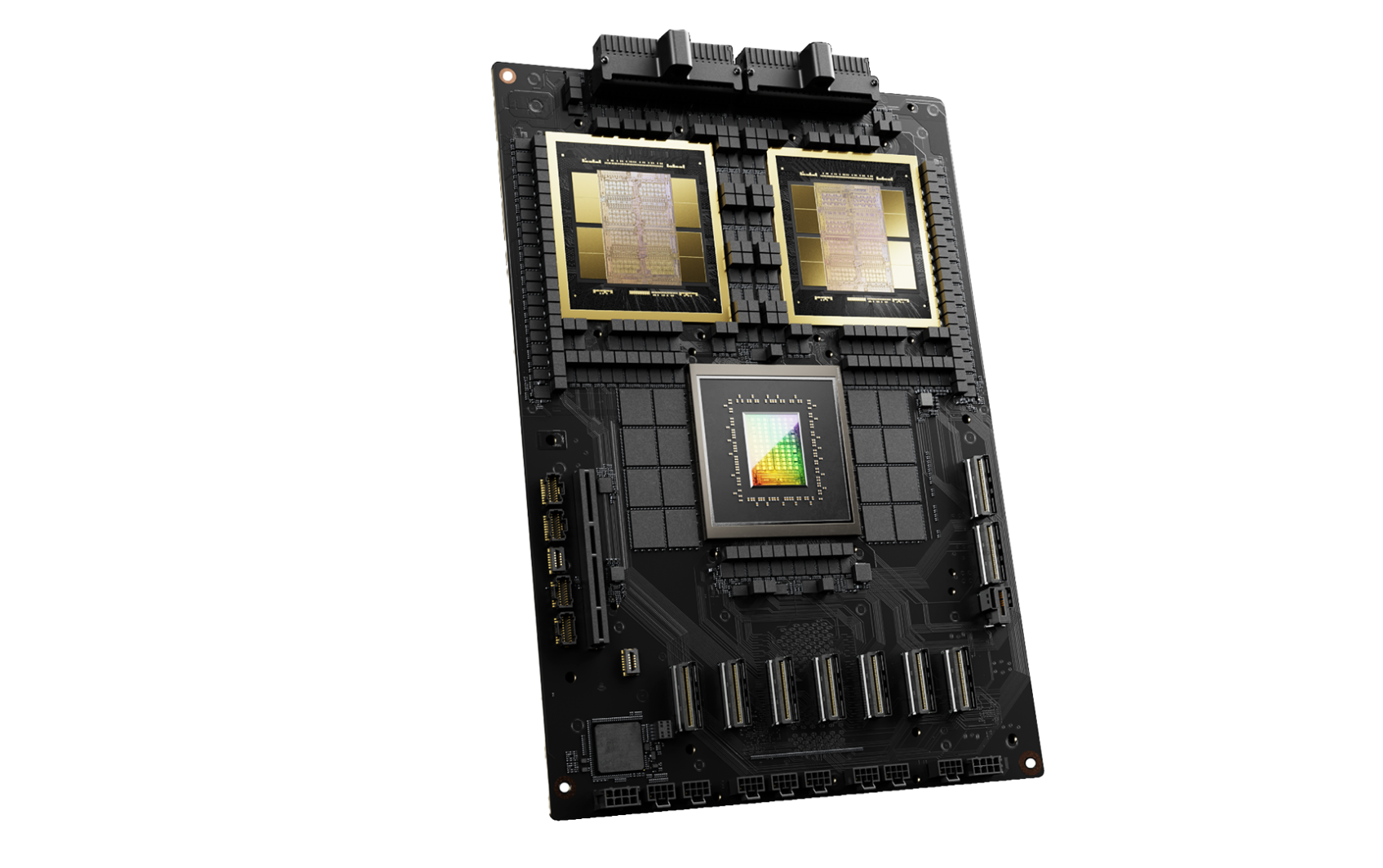

Key Components of NVIDIA GB200 NVL72

GB200 Grace Blackwell Superchip

At the core of the NVIDIA GB200 NVL72, the GB200 Grace Blackwell Superchip combines two Blackwell GPUs and one Grace CPU over a 900 GB/s NVLink chip-to-chip (NVLink-C2C) interconnect, accelerating data processing and computation for actionable insights.

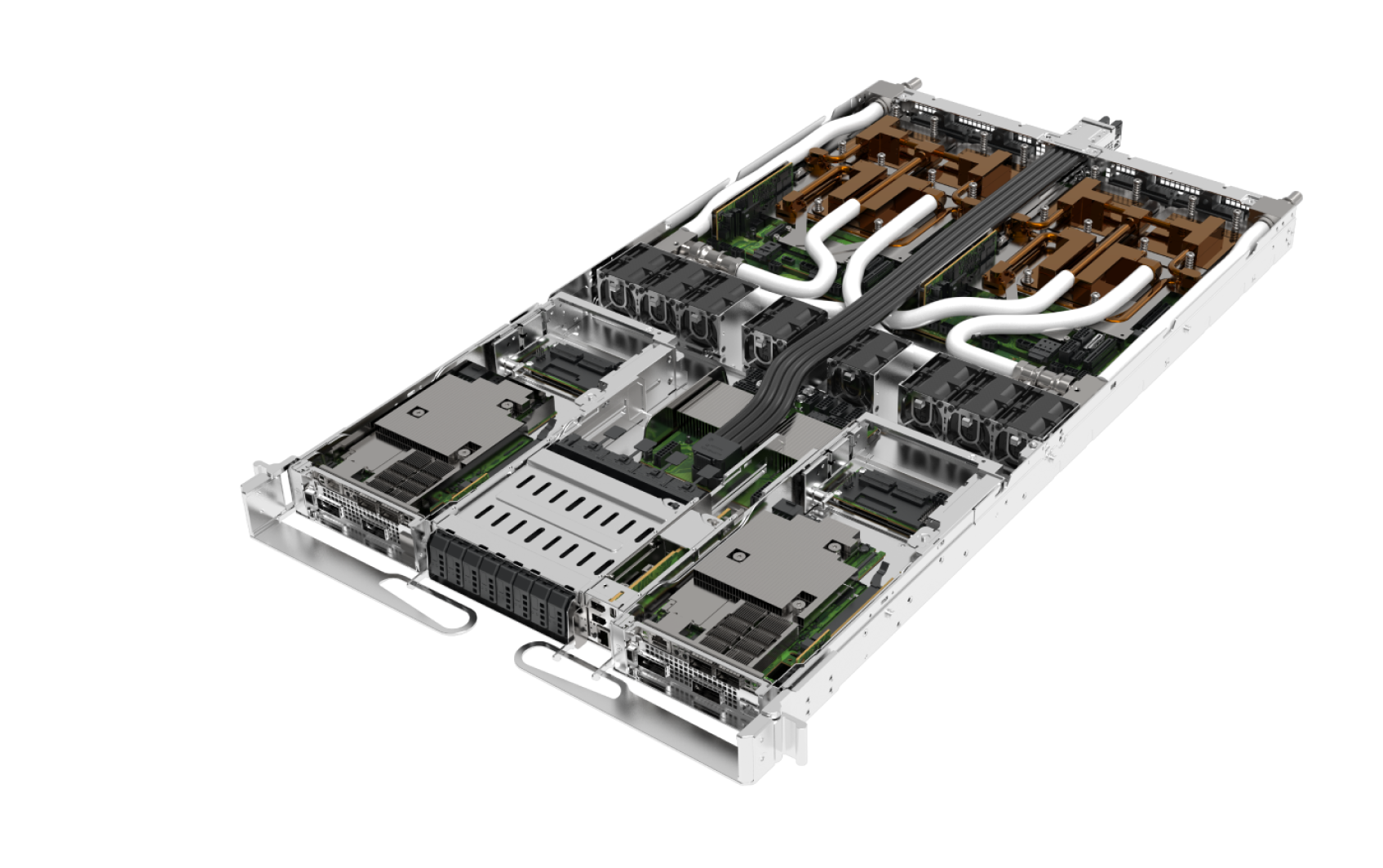

Blackwell Compute Node

The Blackwell compute node, built on the liquid-cooled NVIDIA MGX architecture, is powered by two GB200 Grace Blackwell Superchips. Delivering 80 petaFLOPS of AI performance, it is billed as the most powerful compute node ever created, and multiple nodes scale up into the GB200 NVL72 for even greater performance.
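The component counts on this page are consistent with each other: one Grace CPU and two Blackwell GPUs per superchip, and two superchips per compute node. The sketch below derives the rack's superchip, CPU, and node counts from its 72 GPUs. The constant and function names are hypothetical, and the `naive_ai_pflops` figure is a simple linear sum of the quoted 80 petaFLOPS per node. It assumes perfect scaling and is not an official rack-level specification.

```python
# Hypothetical sanity check of GB200 NVL72 component counts, using only the
# per-superchip and per-node ratios stated on this page.
GRACE_CPUS_PER_SUPERCHIP = 1      # one Grace CPU per GB200 superchip
BLACKWELL_GPUS_PER_SUPERCHIP = 2  # two Blackwell GPUs per GB200 superchip
SUPERCHIPS_PER_NODE = 2           # two GB200 superchips per compute node
NODE_AI_PFLOPS = 80               # quoted AI performance per compute node
RACK_GPUS = 72                    # quoted GPU count for the full rack


def rack_topology(rack_gpus: int = RACK_GPUS) -> dict:
    """Derive superchip, CPU and node counts, plus a naive PFLOPS sum, from the GPU count."""
    if rack_gpus % BLACKWELL_GPUS_PER_SUPERCHIP:
        raise ValueError("GPU count must be a multiple of GPUs per superchip")
    superchips = rack_gpus // BLACKWELL_GPUS_PER_SUPERCHIP
    if superchips % SUPERCHIPS_PER_NODE:
        raise ValueError("superchip count must be a multiple of superchips per node")
    nodes = superchips // SUPERCHIPS_PER_NODE
    return {
        "superchips": superchips,
        "grace_cpus": superchips * GRACE_CPUS_PER_SUPERCHIP,
        "compute_nodes": nodes,
        # Assumes perfect linear scaling; not an official rack-level figure.
        "naive_ai_pflops": nodes * NODE_AI_PFLOPS,
    }


if __name__ == "__main__":
    print(rack_topology())
```

With the default 72 GPUs, this yields 36 superchips, 36 Grace CPUs, and 18 compute nodes. These numbers match the highlights listed above.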

The Next-Gen AI Data Center Solution

Liquid-to-Air Solution

- Supports Cooling Capacity of up to 80 kW

- All Heat Rejected to Room Air by Fans

- Ideal for Upgrading Existing Air-Cooled Data Centers

Liquid-to-Liquid Solution

- Provides Cooling Capacity of up to 1300 kW, Supporting 4 or More AI Racks*

- Heat Rejected Mainly to Facility Liquid

- Enables High-Density Computing while Significantly Reducing Energy Consumption

*The actual number of racks depends on the power consumption of the IT racks.
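Because the number of racks a cooling solution can serve depends on per-rack power, a quick sizing calculation can make the footnote concrete. The sketch below takes the two capacities quoted above and divides them by a per-rack heat load. The function names are hypothetical. The 100 kW and 40 kW per-rack loads are illustrative assumptions, not specifications of any product. It also assumes every watt of IT power becomes heat that the cooling solution must remove, and that all racks draw the same load.

```python
# Hypothetical cooling-capacity sizing sketch. Capacities come from this page;
# per-rack heat loads used below are illustrative assumptions only.
LIQUID_TO_AIR_KW = 80       # quoted liquid-to-air cooling capacity
LIQUID_TO_LIQUID_KW = 1300  # quoted liquid-to-liquid cooling capacity


def racks_supported(capacity_kw: int, rack_kw: int) -> int:
    """Number of identical racks whose combined heat load fits within the capacity."""
    if rack_kw <= 0:
        raise ValueError("rack_kw must be positive")
    return capacity_kw // rack_kw


def max_rack_kw(capacity_kw: int, racks: int) -> float:
    """Largest average heat load per rack when the capacity is split evenly."""
    if racks <= 0:
        raise ValueError("racks must be positive")
    return capacity_kw / racks


if __name__ == "__main__":
    # Supporting 4 racks implies an average of at most 325 kW per rack.
    print(max_rack_kw(LIQUID_TO_LIQUID_KW, 4))
    # With an assumed 100 kW per rack, the same capacity covers 13 racks.
    print(racks_supported(LIQUID_TO_LIQUID_KW, 100))
    # With an assumed 40 kW per rack, the liquid-to-air capacity covers 2 racks.
    print(racks_supported(LIQUID_TO_AIR_KW, 40))
```

Integer floor division is used so that a partial rack is never counted as supported. Real deployments would also need headroom for coolant temperatures and redundancy, which this sketch ignores.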