Built for AI. Engineered for Speed.

AI applications, whether large-scale training, real-time inference, or massive data processing, rely not only on powerful GPUs but also on high-performance storage to keep data moving and sustain throughput. During GPU-accelerated training or inference, datasets and model parameters are transferred from storage to GPU memory for computation. When storage I/O is too slow, GPUs sit idle, wasting GPU resources and extending overall training or inference time.

As a result, high-bandwidth storage has become a mission-critical element of modern AI infrastructure. Ingrasys high-performance storage addresses this bottleneck with a disaggregated architecture that uses NVMe-over-Fabrics (NVMe-oF) to enable direct, low-latency data transfer from SSDs to GPU memory over Ethernet. By minimizing data path latency, the architecture helps AI workloads run at peak GPU utilization, accelerating time-to-insight and maximizing efficiency.
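As a rough illustration of the idle-GPU effect described above, the following Python sketch models a single training step in which loading the next batch overlaps perfectly with compute. The function name, batch size, compute time, and both storage bandwidths are hypothetical values chosen for illustration; they are not measurements of any Ingrasys system.

```python
def gpu_utilization(batch_bytes: float,
                    storage_bw_bytes_per_s: float,
                    compute_s: float) -> float:
    """Fraction of each step the GPU spends computing.

    Simplifying assumption: the next batch is prefetched while the
    current one is processed, so a step lasts as long as the slower
    of the two stages (load or compute).
    """
    load_s = batch_bytes / storage_bw_bytes_per_s
    step_s = max(load_s, compute_s)
    return compute_s / step_s


if __name__ == "__main__":
    BATCH_BYTES = 2e9   # hypothetical: 2 GB of data per step
    COMPUTE_S = 0.1     # hypothetical: 100 ms of GPU work per step

    # Hypothetical storage bandwidths, in bytes per second.
    for label, bandwidth in [("slow storage", 5e9), ("fast storage", 40e9)]:
        util = gpu_utilization(BATCH_BYTES, bandwidth, COMPUTE_S)
        print(f"{label}: GPU utilization {util:.0%}")
```

In this simplified model, utilization stays below 100% whenever loading a batch takes longer than processing it, which is the situation higher-bandwidth storage is meant to avoid.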

Highlights

Easy Scalability for Expanding Data Needs

Built on a fully disaggregated architecture that decouples storage from compute, the storage system lets organizations scale storage resources independently of compute, maintaining efficiency as data demands grow. This flexibility makes it well suited to AI and HPC environments that require frequent scaling, delivering the capacity and agility needed to keep pace with evolving workloads.


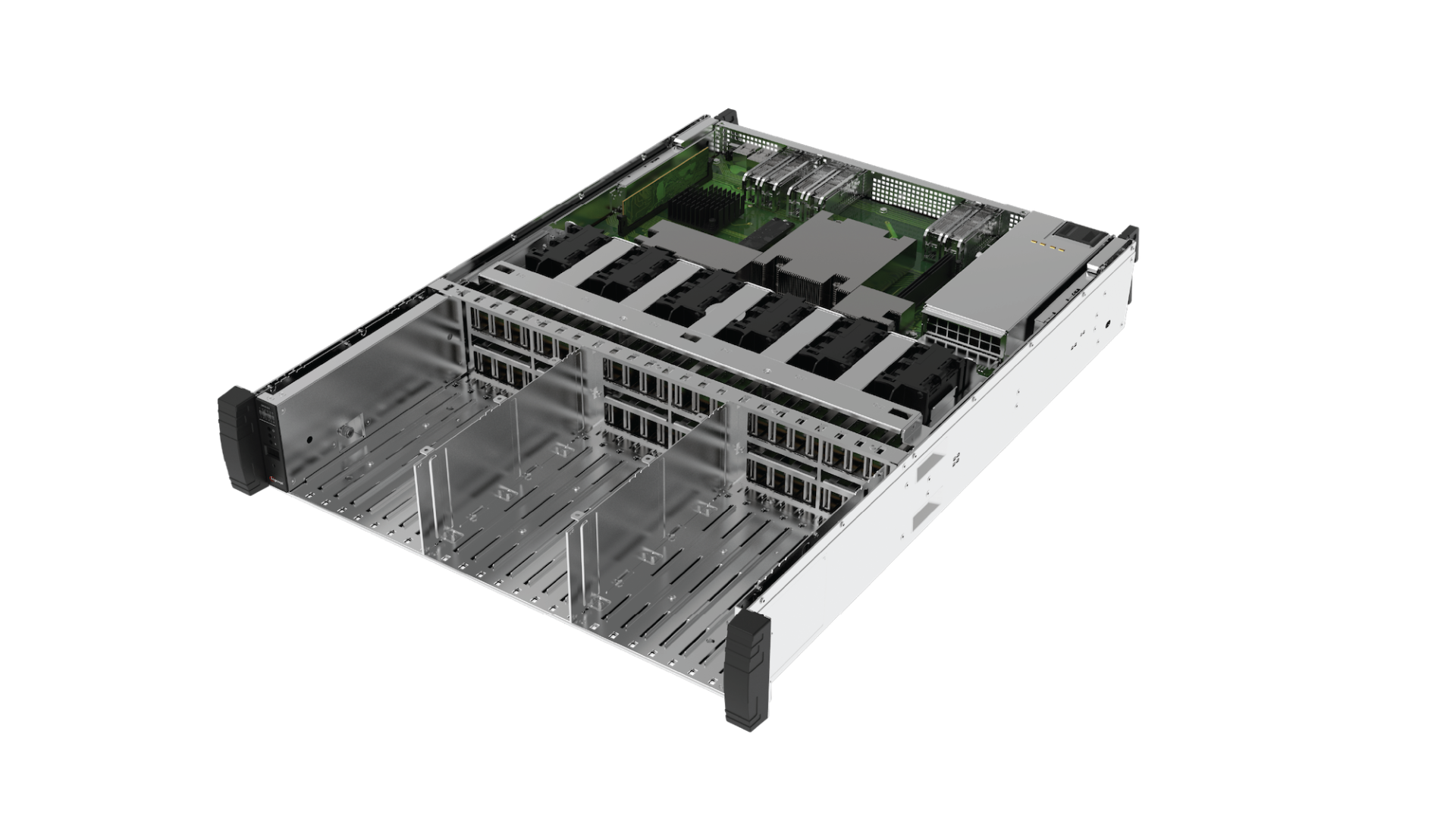

Innovative and Patented Design

The storage system incorporates multiple patented designs. Its virtual midplane optimizes airflow throughout the chassis, providing consistent cooling for all components while eliminating a single point of failure. Designed for serviceability, the switch module and drive carrier allow quick component replacement, improving operational reliability and minimizing downtime.

Technology

NVMe-over-Fabrics (NVMe-oF)

NVMe-over-Fabrics (NVMe-oF) is a protocol that extends the high-speed benefits of NVMe storage beyond the local server, enabling data transfer between storage systems and host servers over common network fabrics such as Ethernet, InfiniBand, or Fibre Channel. Traditionally, NVMe devices are connected directly to a server’s PCIe bus. NVMe-oF removes this limitation by allowing remote NVMe storage to be accessed almost as fast as local SSDs, reducing latency and improving overall storage efficiency in distributed environments.

Featured Product

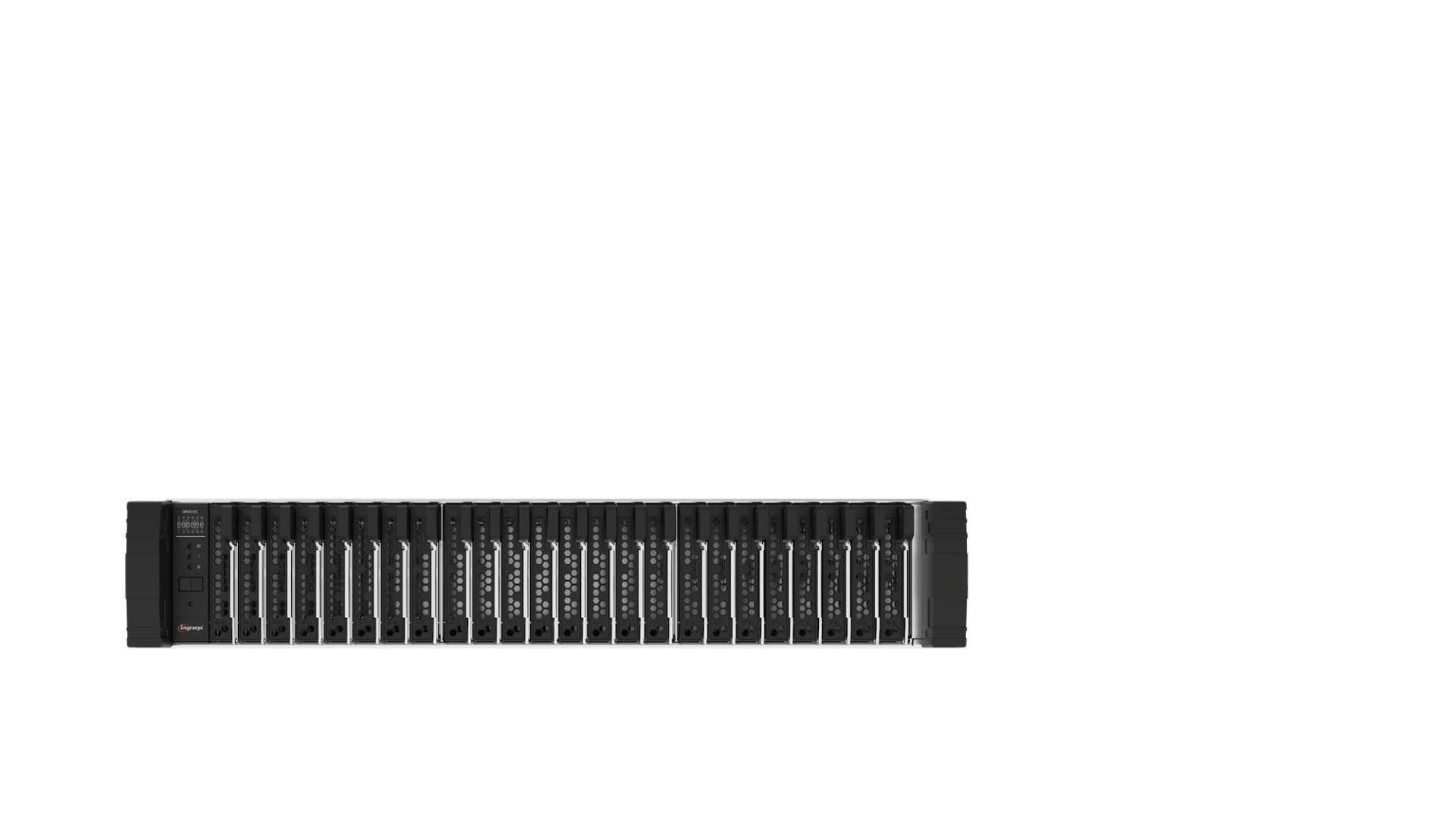

Ingrasys redefines next-generation storage with the ES2100, an NVMe-over-Fabrics (NVMe-oF) solution purpose-built for AI and HPC workloads. The 2U storage system supports E3.S and U.2 SSD form factors and features a midplane-less design for enhanced airflow and reliability. The system is equipped with two hot-swappable, redundant switch modules (SWMs) that provide high availability and allow future system upgrades simply by replacing the SWMs, without impacting the overall architecture.

ES2100

- Ethernet Switch: NVIDIA Spectrum-2

- Drives: 24 x U.2/E3.S NVMe SSDs

- Connectors: 24 x 200GbE QSFP56 Ports

- Power Supply: 1+1 Redundant 1600W Platinum Power Supplies

- Form Factor: 2 RU Air-cooled System
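
For a sense of scale, the nominal network bandwidth implied by the connector specification above can be worked out with simple arithmetic. This is a back-of-the-envelope sketch, not a performance figure: it assumes all 24 ports run at their full 200 Gb/s line rate at the same time and ignores protocol overhead, switch limits, and SSD throughput. The function and constant names are hypothetical.

```python
def nominal_aggregate_gbps(ports: int, port_speed_gbps: float) -> float:
    """Sum of port line rates in gigabits per second (nominal, no overhead)."""
    return ports * port_speed_gbps


# Values taken from the ES2100 connector specification listed above.
ES2100_PORTS = 24
ES2100_PORT_SPEED_GBPS = 200

total_gbps = nominal_aggregate_gbps(ES2100_PORTS, ES2100_PORT_SPEED_GBPS)
total_gbytes_per_s = total_gbps / 8  # 8 bits per byte

print(f"Nominal aggregate line rate: {total_gbps} Gb/s "
      f"({total_gbytes_per_s} GB/s)")
```

Real-world throughput will be lower than this nominal line rate and depends on the drives, the fabric, and the workload.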