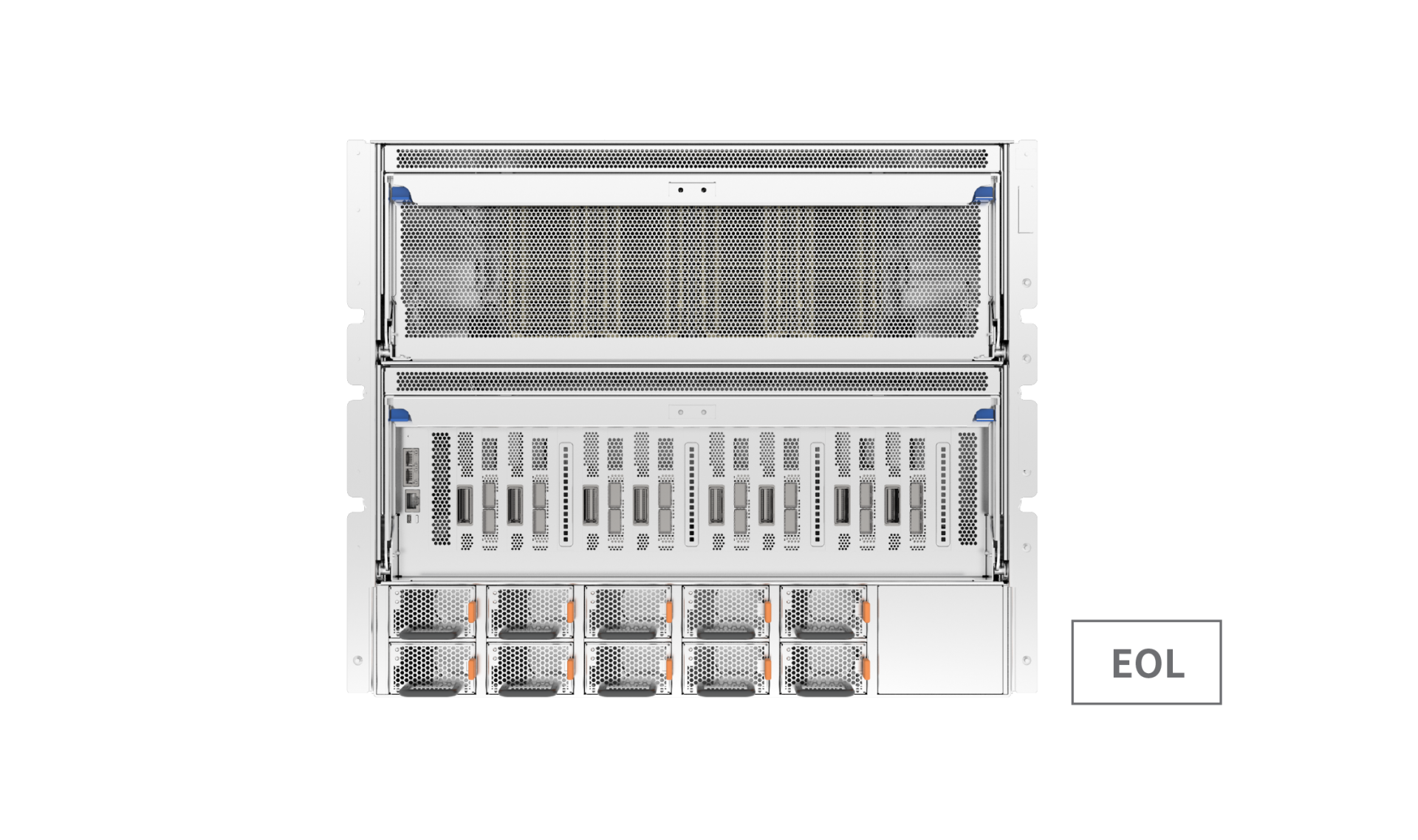

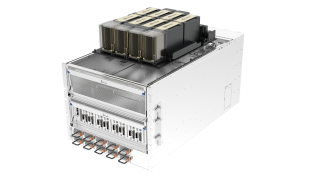

GB10181N

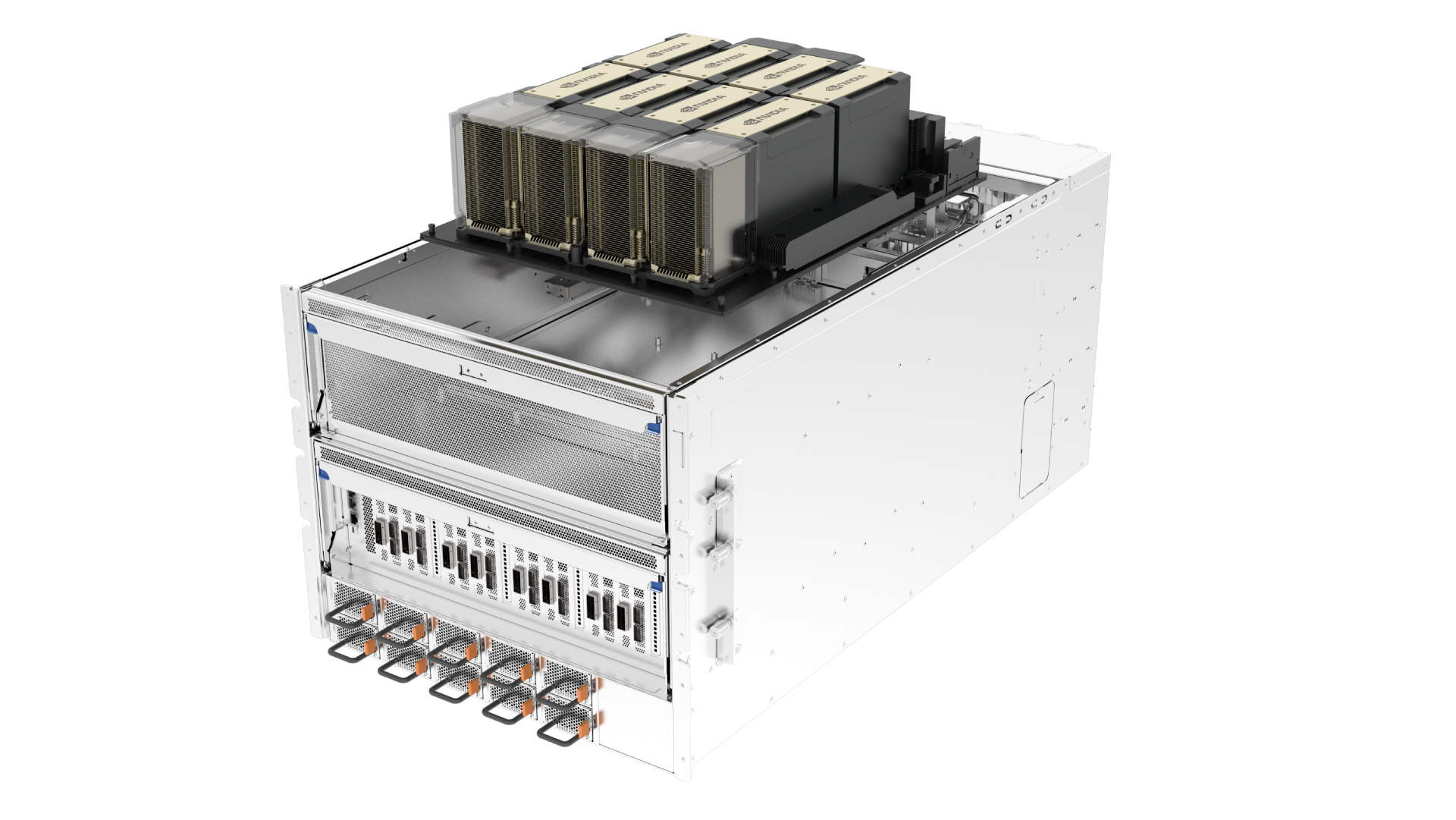

Unrivaled Air-Cooled HGX H100 8-GPU AI Server for Ultimate AI Performance

Deploy Latest NVIDIA NVLink® & NVSwitch™ Technology

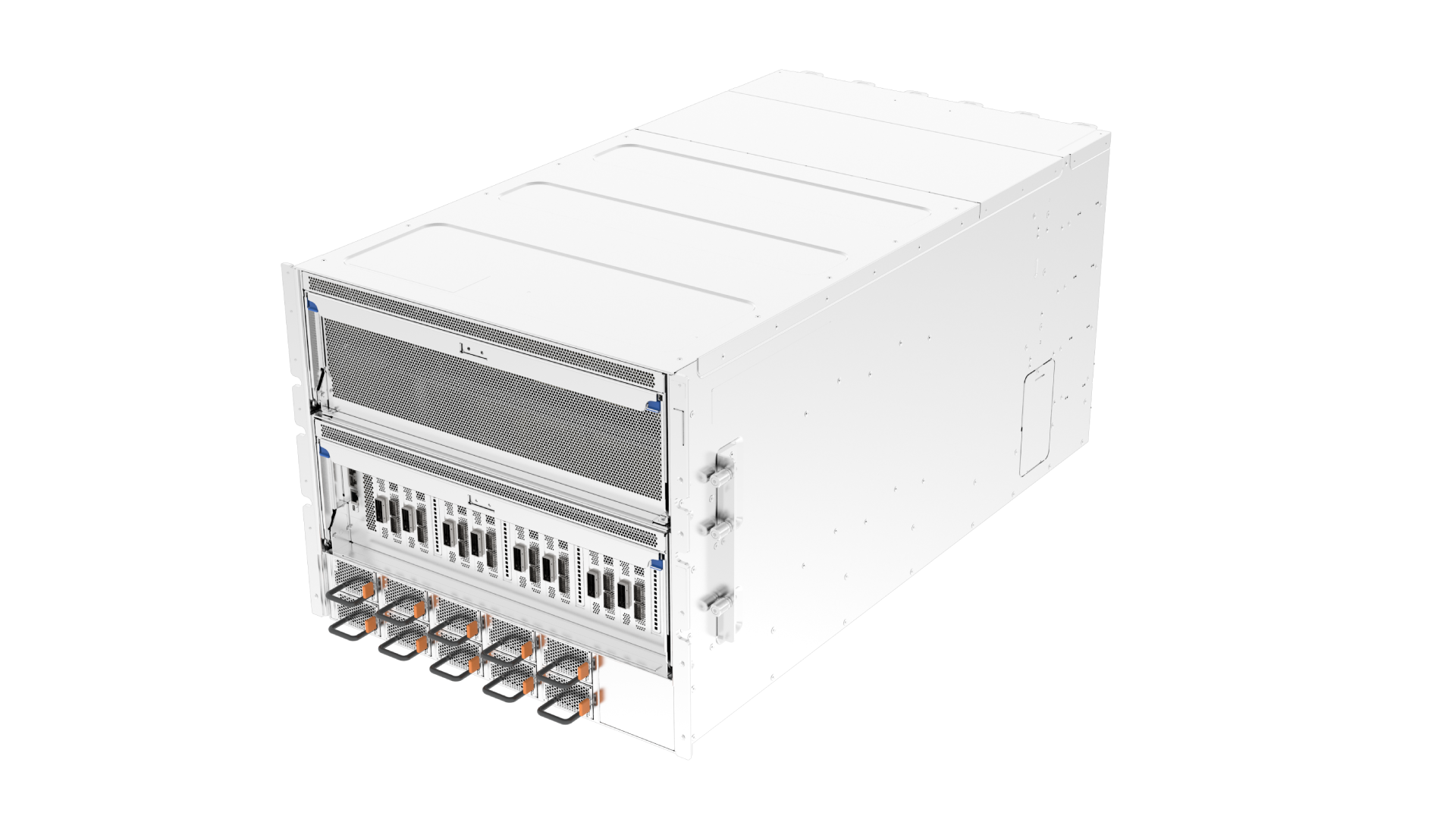

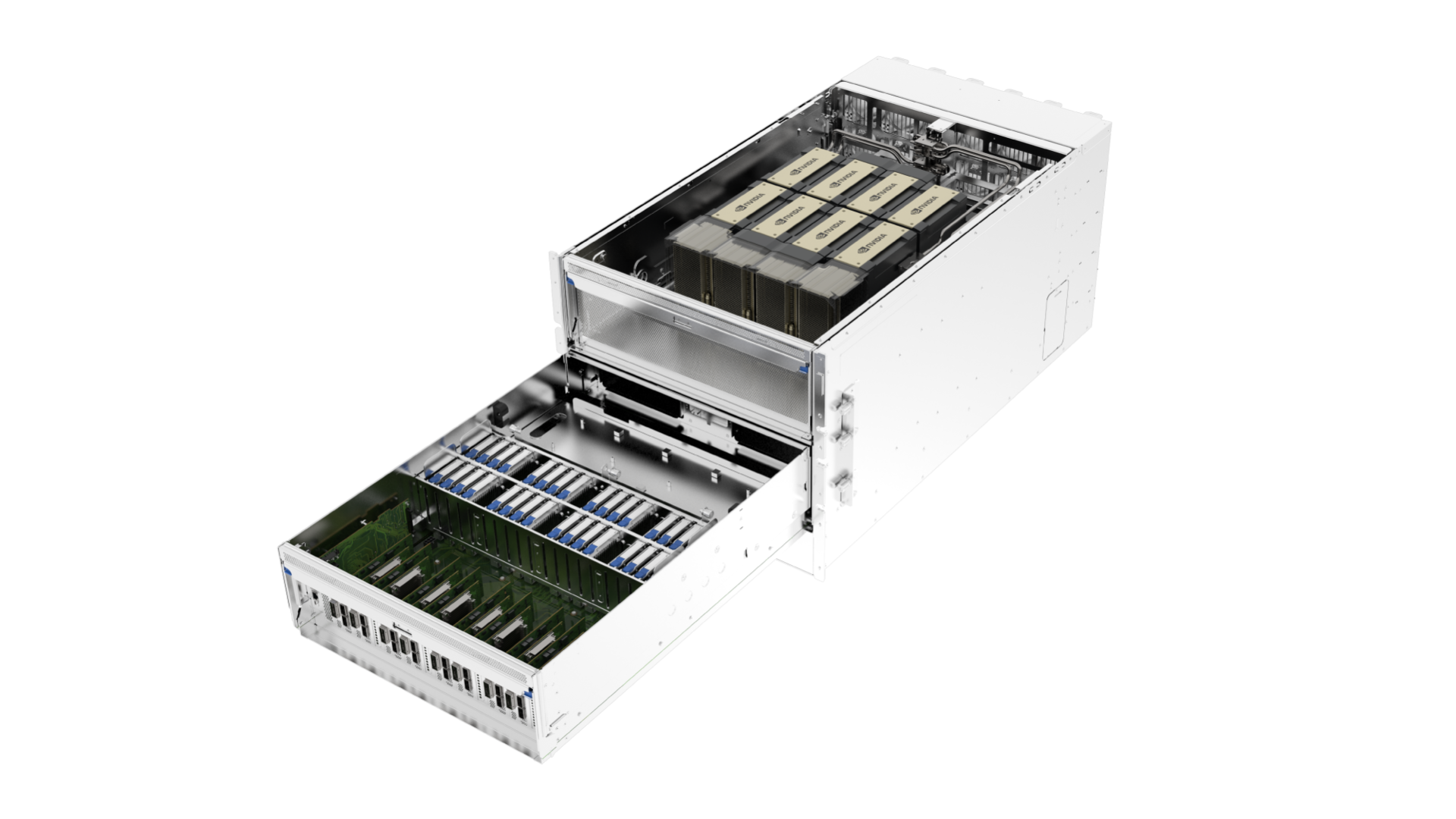

Two-layer GPU & IO Sleds for Easy Scalability and Better Thermal Efficiency

Support 20 x PCIe 5.0 Slots & 32 x NVMe Drive Bays

Air Cooling Solution

Application

Generative AI

Large Language Model (LLM)

Hyperscale Data Center

High Performance Computing

AI and Machine Learning

Unrivaled HGX H100 8-GPU AI Server


Innovative Disaggregated Approach

(Ingrasys head node solution: SV2121A/SV2121I)

High Expansion Capability

Certification


| Supported GPU | NVIDIA HGX H100 8-GPU |

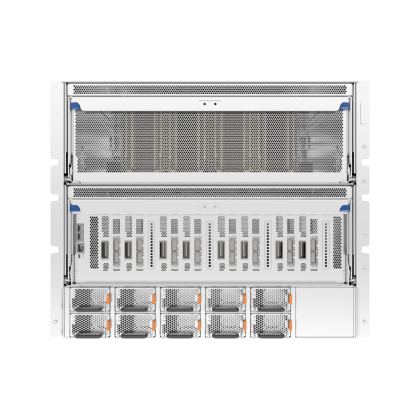

| Expansion Slots | 20 x PCIe 5.0 x16 Slots |

| Storage | 32 x Hot-swap U.2 NVMe Drive Bays |

| Front Panel | 1 x Power LED, 1 x UID LED, 1 x Attention LED |

| Form Factor | 10U Rackmount (4U IOB Sled with 4U GPU Sled) |

| Chassis Dimensions (H x W x D) | 17.3" x 20.0" x 37.3" / 440.5 mm x 508.0 mm x 948.8 mm |

| Management | 1 x ASPEED AST2600 |

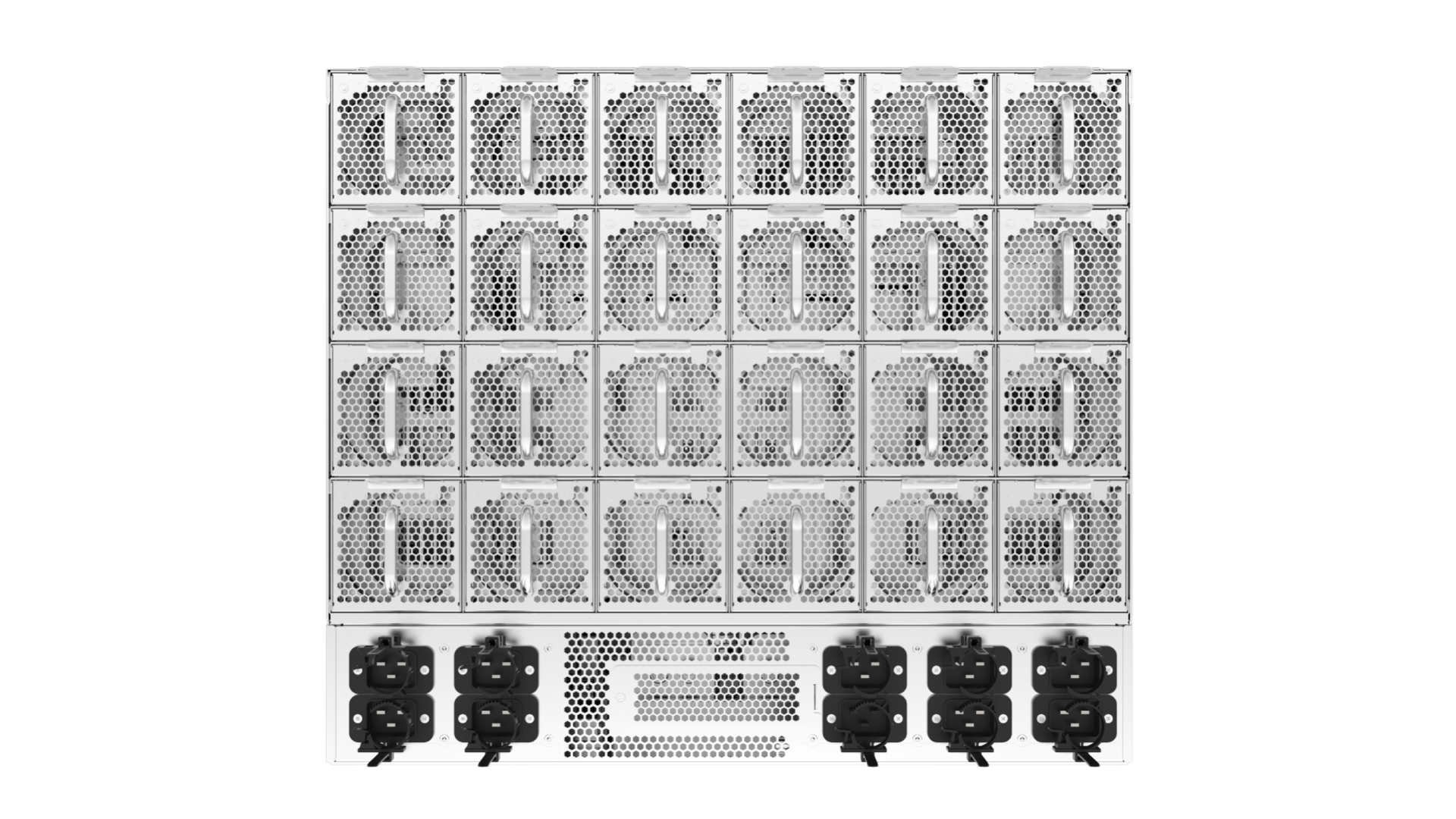

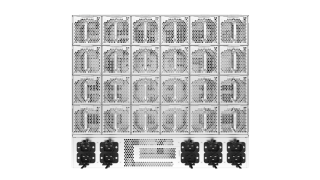

| Power Supply | 5+5 Redundant 3000W Platinum Power Supplies |

| Fans | 24 x 80 mm x 80 mm Fans for N+1 Cooling Redundancy |

| Certification | CE/FCC/RCM/BSMI/UL/IECEE CB |

| Operating Temperature | 10°C to 35°C (50°F to 95°F) |

| Non-operating Temperature | -40°C to 60°C (-40°F to 140°F) |

| Operating Relative Humidity | 8% to 85% RH |

| Non-operating Relative Humidity | 5% to 95% RH |